Friday 16 October 2015

Thursday 4 June 2015

Questioning What Was Asked: Why There's More to a Poll than Just Its Findings

Whether it’s about an upcoming election, attitudes

towards race in the criminal justice system, or which celebrity will take home

the award for best performer – people love polls!

But how much do they really love them? Do they love them

enough to care about what goes into making them?

Otto von Bismarck once said that “Laws are like

sausages, it is better not to see them being made”. However, I would like to paraphrase what Bismarck

said and say that polls are

akin to sausages in that people like to consume them but don’t really care much

to see how they’re made.

It’s easy for someone (myself included) to discover a survey

or study in the news, immediately generate an opinion about the subject matter,

and move on without really asking how those findings were reached. However,

the information to which an individual is exposed has the potential to influence

their attitudes, opinions, and even future behaviour.

In a recent article

in Public Opinion Quarterly, Kevin K. Banda, a Political

Science professor at the University of Nevada, Reno, stated that “citizens want

to collect adequate information to make accurate decisions while minimizing the

costs they face. This accuracy motivation along with citizens’ disinterest in

politics encourages them to rely on easily accessible cues when forming

attitudes about candidates”[i].

These accessible cues Banda refers to are also known as heuristics,

a kind of cognitive shortcut.

Simply put: polls are heuristics. They’re easy-to-absorb

snapshots of what the public thinks, and these snapshots generate

opinions.

Questionnaire design is an essential part of an

opinion poll. Researchers go to great pains considering how the use or

removal of even a single word can influence someone’s response to a specific

question. It is important for consumers

of polling data to understand this, and journalists can help educate readers by

including question wording directly in,

as opposed to alongside, their

articles.

When Patrick Brown won the Progressive Conservative

Party’s leadership contest, the Toronto Star published the findings of a Forum Research poll

which asked respondents a series of questions about their attitudes towards

Brown and some of the views that he has championed during his political career.

The article reported attitudes towards provincial voting intentions, creationism,

same-sex marriage, and the sex-education curriculum. While

it is appreciated and encouraged that the Toronto Star provided a link to Forum

Research’s press release, the columnist failed to disclose in their article

what questions Forum asked. I use this article as an example to highlight the

importance of disclosing survey questions in media publications and not as a

means to criticize the Toronto Star or the respective columnist directly.

The Market Research and Intelligence

Association (MRIA), the regulatory body within the polling and market research

industry, states in their Code of Conduct that for all reports of survey

findings the Client (in this case the Toronto Star) has released to the public,

the Client must be prepared to release the following details on request:

- Sponsorship of the survey

- Dates of interviewing

- Methods of obtaining the interviews (telephone, Internet, mail or in-person)

- Population that was sampled

- Size description and nature of sample

- Size of the sample upon which the report is being released

- Exact wording of questions upon which the release is based

- An indication of what allowance should be made for sampling error

The

Toronto Star article in question met three of the eight requirements above:

method of contact (in this case IVR), the sample size (1,001 people), and the

allowance for sampling error (considered accurate to within three

percentage points, 19 times out of 20).
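As a rough check on that last figure, the conventional margin of error can be worked out from the sample size alone. The sketch below assumes a simple random sample, the worst-case proportion of 50%, and a 95% confidence level (the "19 times out of 20"). Forum's own calculation, including any weighting, is not described in the article, and the function name is only illustrative.

```python
import math

def margin_of_error(sample_size, proportion=0.5, z=1.96):
    """Approximate margin of error for a simple random sample.

    z=1.96 corresponds to a 95% confidence level ("19 times out of 20").
    proportion=0.5 is the worst case and gives the widest margin.
    """
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)

moe = margin_of_error(1001)
print(f"{moe * 100:.1f} percentage points")
```

Under these assumptions a sample of 1,001 works out to roughly 3.1 points, which is consistent with the "three percentage points" reported.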

I understand that it’s

awkward for a journalist to include methodological minutiae such as the dates

that the survey was in the field or perhaps going deep into a firm’s sampling

frame. Being a journalist is not easy! They are subject to tight and frequent

deadlines, word counts, constant research and fact-checking, and of course

writing a story that people will want to read. Question wording, however, is

not a methodological triviality and if a journalist is willing to report the

findings of a question, they should at least include the question that was

asked.

I applaud and encourage journalists

for incorporating polling data in their publications. However, my criticism

lies in the fact that readers are not being told the whole story when question wording is withheld. A journalist may

believe that they are informing their readers by including a firm’s press

release alongside their article; however, Banda’s comments on heuristics

suggest that the probability of a reader following up on external information to

further their knowledge (in this case learning what questions were asked) is

low.

By including what was asked

directly in the article, a journalist provides a more potent heuristic for

people to digest without demanding significantly more effort from

the reader. If the questions asked (in this case voting intentions, same-sex

marriage, etc.) were included alongside the poll’s findings in the

article, then readers would be able to acquire a more comprehensive and

three-dimensional view of what public opinion is like in Ontario because they have

a better idea of where these attitudes and opinions are coming from.

Market researchers and

pollsters spend a lot of time thinking about what questions to ask on a survey, how to frame these questions, when

and where to ask these questions

in the survey, and why these

questions were asked. If journalists are in the business of selling sausage,

they should tell their readers a little bit more about what goes into

making it.

[i] Banda, Kevin K. "Negativity and Candidate Assessment." Public Opinion Quarterly 78.3 (Fall 2014): 707-20. Print.

Friday 15 May 2015

Polling for the Party: How Public Opinion Influences Political Strategy

Polling

is a powerful and important tool that political parties use to understand how

the public perceives candidates, issues, or other important matters that can

affect the outcome of an election. However, the research that political parties

conduct, known as internal polling, is rarely released to the public and is

shrouded in mystery. The aim of this article is to remove some of the fog of

war surrounding the topic of internal polling and provide readers with some

insight as to how internal polling works.

I

was fortunate enough to interview John Corbett CMRP, FRSS of Corbett Communications to learn more about

how political parties use polling and research to help shape their playbook.

John has worked for a number of political parties and has decades of experience

within the world of polling and market research. Below, the reader will find my interview with John

that took place on May 13th, 2015.

Adrian: So let’s begin with a rather simple question: what

exactly is internal polling?

John:

It’s the polling a party does to see how some candidate is doing and how the

other candidates are doing. It doesn't share it with the public except in

extreme circumstances. It’s usually done by a trusted party pollster who’s been

doing it for a long time. That’s the only way it really differs from regular

polling: it tends to be done with a lot more rigour and it’s done internally,

it’s not shared with the public.

Adrian:

What is an example of

something that a party would really want to know? Alternatively, what is an example of

something a party just couldn't be bothered with?

John: Well,

they obviously want to keep an eye on the horse-race but they aren't really too

interested in that. They know that their success at the ballot box is not going

to be measured in their polls but their Get-Out-The-Vote effort. So they tend

to be very interested in issues – issues that are being debated, they’ll be

very interested in how a candidate did at a debate or after a debate, and

they’re very interested in what are the doorstep issues that people want to

discuss. They use polling to craft the message.

Adrian:

How often do political

parties use polling as a means to develop policy platforms or communications

strategies?

John:

All the time.

Adrian: From your experiences, is there an increase in this

type of polling in an election year or are these topics being polled on a

continuing basis?

John:

From what I know, parties will keep their toes in the water in-between

elections but they don’t really ramp up the polling because it’s costly. It’s

not inexpensive and they’re polling every night during an election campaign. So

they tend to start off about a month before they think the writ is going to

drop, or you know in the case of a snap election the moment the writ drops and

continue till the end of the campaign period.

Adrian:

Is there a significant difference in a

pollster’s workload in an election year as opposed to half-way through a

majority government?

John:

Oh god yes! Election years are like Christmas, New Year’s, and Easter all rolled

up into one!

Adrian:

How does your workload differ when you’re working for a party that is in opposition

as opposed to when you’re working for the party that has formed government?

John:

There’s really not much difference. They still want to know what people think

of the doorstep issues. You still want to know whether your message is getting

out and whether it’s being heard. In a campaign situation, a lot of what you’re

doing is not actually polling – it’s voter identification. A lot of it is “Are

you planning to vote for us, sir? Thank you very much. Add him to the

database”. So a lot of that goes on as well but it doesn't really matter if

you’re polling for the government or the opposition. It’s basically the same

principle.

Adrian: When a political party puts a research team together, do

they tend to hire individual consultants or are they more likely to hire a firm

to conduct their research?

John:

Well, the infrastructure necessary to do the volume of work that they are

calling on really only belongs to a couple of large firms. There’s not that

many people who can supply the work.

Adrian: What do parties look for when they hire pollsters – is

there a lot of politics involved?

John:

There’s a certain amount of politics involved. There are pollsters who have

worked for one party longer than another party. But really what you’re looking

for is accuracy and the quality of the work that they deliver. Quality control in

the phone room and accuracy of the poll results - rigid quality control.

Adrian:

When would you want to conduct qualitative

research and when would you want to conduct quantitative research?

John:

You might conduct some focus groups at the beginning of a campaign or in the

middle of your mandate. If you’re the government you’re probably conducting

focus groups pretty regularly. But once it gets down to the nitty-gritty of the

campaign you really don’t have time for that, you’re doing quantitative polling

every night.

Adrian: How often do party-pollsters pay attention to publicly

released polls or the polls that you see in the media?

John:

Not much. They really rely on their internal polling.

Adrian: Each party will have a different budget for polling and

research based on how much money they have in their war chest, but what are you

able to tell us about the resources available when working for a party as

opposed to a corporate client? By that I mean do parties ask pollsters to do

more with less?

John:

Oh god yes. And then it’s always tough getting paid. Even if you win you know,

you have to be very careful to get at least a big portion of your payment up

front because once the campaign is over you might as well join the line –

you’re just another creditor.

Adrian: What are some examples of the hiccups or problems that a party-pollster

might have to deal with when working for a political party? Here’s an example

of what I mean by that: has there ever been a situation where a party thinks they have a good policy or

strategy from their research and it turns out to be a flop or even backfires?

John:

It happens a lot, you know. In the US the perfect example would be Mark Penn of

Penn, Schoen & Berland

Associates. He liked to impose his own values on the subjects he was polling

and it led to some disastrous results for the people he worked for.

Adrian: So I use this term loosely but the ‘inherent biases’

of the pollster influenced their performance?

John: Yeah. Some party pollsters think they’re stars, you know?

They think they’re policy makers. They’re not. You know they’re measuring guys,

they’re not policy makers. And the ones who think they are (policy makers) can

often get in the way. Not so much so in Canada.

Adrian: In some elections,

we've seen political parties disclose or 'leak' some of their internal polling

data. We saw this with the CAQ in the most recent Quebec election. Why or when

would a party do this? Is it a wise strategy?

John:

No, it’s never smart because no one pays attention to internal polling.

Everyone knows that if the polling has been leaked it’s because there’s some

kind of spin the party wants to put on it. It’s usually a move of desperation.

The party that is leaking their internal polling is either hoping to gain the

result or isn’t doing very well and is hoping to get something remotely

possible. It really has nothing to do with what you’re doing – that’s not your

audience.

Adrian: Besides things like cross-tabulations, what are some examples

of the statistical or analytical tools a pollster would use when performing

quantitative research for a party?

John: I’m not really the guy to ask about that, I’m more the issues

guy. You know, you would use factor analysis, because if you had a number of

different factors and you wanted to build the best possible combination without

testing them all you use factor analysis. You might use cluster analysis,

segmentation analysis to do a segmentation of the voters and look at them by

stereotypes – voter stereotypes. Things like that. There are more sophisticated

methods than that but like I said that’s not my specialty.

Adrian: How often would a political party poll their base as

opposed to the public at large?

John:

All the time. Both, all the time. Certainly from the moment the writ is dropped

they’re in the field every night.

Adrian: Besides maybe during a by-election, how often would a

political party conduct constituency-level polling?

John:

Quite a lot. They’ll supply a certain amount of support for riding associations

to do polling. They do that quite a lot. But Get-Out-The-Vote polling is a form

of riding polling.

Adrian: From your time spent in the industry, how has internal

polling changed over the years? What are parties looking at now that they

weren't looking at 5 or 10 years ago? Has

technology changed the way parties conduct

research?

John:

Well, they’re not using online polling that’s for sure. The people who vote

don’t tend to be the people who are online. They do recognize that response

rates are going down and they’re going to have to deal with that. I've heard a

couple of people I know in the industry talking about returning to door-to-door

polling because of the low response rates on telephones. I’m not suggesting

anyone is going to be doing door-to-door polling in the near future. But the

thing is they don’t use online panels because they aren't random, they’re not

statistically reliable and that’s why telephone polling is always going to be

what you get from the party.

Monday 4 May 2015

Omnibus Polling: Premium Research Without the Premium Cost

Imagine that

you're the recently hired Director of Communications at a non-profit in

Toronto. Your firm is in desperate need of a new marketing and fundraising

strategy. You know that if you want your organization to succeed and grow, you

will need to find out what the people are thinking and how these attitudes, opinions, and behaviours affect your organization's well-being. There's just one problem -

one big problem: your organization has informed you that they don't have the

funds you need to take on the massive in-depth market research project that you

had envisioned.

Q: What

would you do in this situation?

A: Buy

yourself some space on an omnibus poll.

Just

because your organization's war chest is suffering doesn't mean the quality of

your research should suffer. An omnibus

poll (also known as a syndicated study) is a public opinion survey with a

series of questions that are sold individually or in ‘blocks’ to

non-competing clients across a number of industries. Purchasing questions on

an omnibus poll is an economical way for businesses or

organizations to acquire modest amounts of data by paying a (relatively)

nominal cost for each question they wish to have answered. Depending on

what firm the research-buyer chooses, purchasing real estate on an omnibus poll

or syndicated study could cost anywhere from $750 to $1,000 per question. Some firms

may be able to sell blocks of survey questions at a discounted rate.

The reader

should note that each market research or polling firm will have different means

of collecting, presenting, and sharing data. Some firms are transparent with

their findings and will allow all clients of a syndicated study to see what

each client has purchased and what the findings of those questions are.

Alternatively, some firms may wish to withhold data and will only show clients

the findings from questions for which those specific clients paid.

Additionally, some firms may simply give their clients the results of their

findings with no interpretation or analysis while other firms will provide

clients with some insight as to what the numbers they purchased mean. Each firm

will have access to different resources and methodologies that influence

their business model, so if your organization

is interested in taking part in an omnibus poll, it's important to find a firm that can

provide you with the most information at the best possible cost.

As a

Director of Communications, it might be upsetting that you may not be able to obtain

all the information you wanted. However, being realistic and strategic about

the data you can afford can pay dividends for your organization's short-term success.

There are too many small- or medium-sized businesses or organizations that would

benefit from market research and polling but simply do not have the funds

needed to take on such an expensive endeavour. Omnibus polling allows these

entities to change their research paradigm from "How much data can we

possibly get our hands on?" to "What kind of data is absolutely

essential to our organization's success?". Furthermore, if you are able to find

a firm that is more transparent with their findings, your organization might be

able to acquire more data than the three or four questions that you paid

for.

When a

business or organization experiences a financial loss or setback,

it's common for the research budget to be the first to feel the swing of the

axe. In this example, you as the Director of Communications would be

able to cope with your organization’s lack of funds by sacrificing

the amount of data you wish to acquire instead of the quality of that data. Use your circumstances to find short-term opportunities and

build upon them to create long-term success.

Thursday 23 April 2015

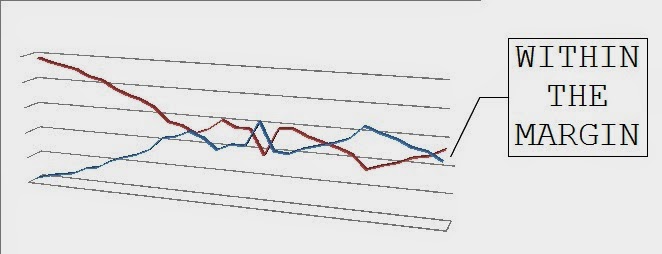

Media and Polling: You're Doing It Wrong

Forum Research is one of the most recognized

names in the world of polling and market research in Canada. Few firms have the

ability to pump out an Interactive Voice Response (IVR) poll with a decent

sample size and get an A1 over the fold in less than 24 hours. When I was at

the Ontario Legislature, a number of my former colleagues would wince

at even hearing the name Forum Research. They weren’t the only ones,

however; many believe that the frequency and tabloid-like nature of Forum

Research's publications actually hurt Canadian democracy and negatively

affect the public's view of public opinion polling at large. Torontoist Magazine best illustrated this in a political

cartoon.

Forum

Research should not be seen as the villain in this story, however. On the

contrary, they are merely a product of their environment. Polling in today's

economy is very difficult. There is a plethora of polling and market research

firms all competing to hear from a population that is getting harder to

reach every year. Low response rates and significant decreases in the use

of landlines are just a few of the realities that a pollster today has to deal

with that their predecessors did not.

In

order to generate business and distinguish themselves in the industry, some

firms conduct a study or poll and simply give the results (or at least the

top-line results) to a friendly media outlet at no charge. Why does this happen? Media outlets don't have the money they once

did to pay for public opinion polls. Polling is expensive no matter how you

slice it and declining sales and readership over the years have seriously

affected how much media outlets are willing or able to pay for this kind of

data. By providing public opinion data for free (or at very minimal cost),

polling firms have become marketers first and pollsters second.

Polling firms seem to promote their companies

as a brand as opposed to promoting the information they produce. When large or reputable media outlets publish

opinion polls, the firm conducting the poll gets earned media and publicity,

thus increasing their visibility and potential for future business. However, when fewer outlets can afford polling

data, pollsters

and media outlets ‘race-to-the-bottom’ in an attempt to mutually promote each

other’s products and services in the best way they can under the circumstances –

and it’s the Canadian public that ends up losing.

I don't think the public should point fingers

at Forum Research for their business model. It’s in the best interest of all

pollsters, regardless of what firm they work for or what political party they

are affiliated with, to be ‘correct’ or 'accurate' in their representation of

what the public thinks. What I think critics of Forum Research or polling in

general should focus on, however, is the manner in which public opinion data is

digested and regurgitated by the media.

The evolution in how the media

covers politics and public affairs over the years has created a dependency

on horse-race polling or the idea that there is always an election no matter

the status of the legislature or how far out the election actually is. By focusing on the top line numbers of a poll and

not the specifics within it, media outlets do the public an injustice. Intentional

or not, this practice creates a less informed electorate even though outlets may actually

believe that they are informing them. This also leads me to believe that media

outlets are more in the business of creating

the news than reporting the news.

It

is possible that the media has a real dependency on polling to sell papers or generate

website traffic – and polling firms need to get that A1 over the fold to get

noticed. These two players are both in a bind, and we as Canadians should

understand what this relationship means to the discussion and evolution of

ideas and policy.

Many journalists do not know how to critically

examine public opinion polls and even if they did have that knowledge,

editorial staff do not believe that this knowledge is relevant to the narrative

that the paper is trying to sell. Journalists have frequent and tight

deadlines, and when a press release crosses their desk with only so much as a basic

explanation of what the numbers say or mean, the report of what the public actually thinks begins to suffer.

I'm not

painting all journalists with the same brush – on the contrary, there are a

number of former journalists in Canada who have gone on to become successful pollsters.

However, when a journalist writes an article that references a

poll, it is important to tell readers why they were given the poll, whether they had to pay

for it, the methodology behind it (beyond the margin of error), and why

they chose to use it in their article.

Polling may not be a science like chemistry or

physics, but there is a lot of methodological and statistical rigour that goes

into polling. Forum Research is just one of many trying to make a buck in a

very tough and competitive environment. The polling industry today is making a

serious effort to ensure that firms and researchers disclose their methods as

fully as possible and are transparent with their findings. Media outlets

should follow suit, moving away from superfluous horse-race coverage meant simply to

sell papers and switching their focus to explaining why a certain poll they

chose to work with is valid or significant. When media outlets increase their focus on the mechanics

of polling, the Canadian public stands a greater chance of being informed.

Thursday 5 March 2015

Municipal Polling: Is it Feasible?

Public opinion polling can provide

information as to how different segments within the electorate view issues,

parties, or candidates. Every year, political parties in Canada both federally

and provincially spend hundreds of thousands of dollars on market research and

public opinion related services to help shape their communication strategies

and campaign platforms. When it comes to municipal politics, however, polling

can be a trickier matter.

Poll after poll, Toronto's mayoral

candidates in 2014 jockeyed for the lead as media outlets intensified their

coverage and voters became more alive to the issues. But why is it that we hear

about people's attitudes and opinions towards mayoral candidates but not City

Council candidates? How hard could it be to ask residents of a certain area how

they view their City Councillor?

It’s actually a lot harder than one

would think, both in theory and practice.

One of the major problems with which

the polling industry is grappling today is low response rates. Today's public

has very little patience for public opinion surveys and often

confuses pollsters with telemarketers. It’s quite difficult for pollsters to

find out what people are thinking when more and more of them are refusing to

share their opinions. Twenty or thirty years ago pollsters could acquire a

response from roughly six or even seven out of every ten households contacted.

This meant that polling could be done relatively quickly and cost-effectively.

Today some polls only receive responses from 2 out of every 100 households!

Back then, almost every household had a land

line or home phone, meaning that a vast majority of the population (especially

voters) could be reached at home. Today, however, fewer and fewer households

have land lines, making people harder to contact. Mobile devices or cell phones

have begun (and will continue) to replace landlines as the most common means of

contact. Furthermore, many households are becoming cell-phone-only households,

especially among new Canadians and young Canadians ages 18-34. Pollsters today

must adjust their sampling frames to accommodate the widespread and

continuously growing use of mobile devices to avoid biases in their sampling.

However, blending or mixing land lines and cell phones is not a simple task.

The problems surrounding cell phone polling in a municipal context will become

more evident once we take a look at the sample situation below:

Assume for the moment that I have a

client who is running for Toronto City Council. My client has enough funds in

their war chest to conduct an Interactive Voice Response (IVR) poll with a

sample size of 500. With today's low response rates, it’s very plausible our

hypothetical poll would receive a response rate in the very low single digits.

Hypothetically, say I acquired a response rate of 3% (pretty reasonable for an

IVR poll!). This means that, in theory,

I would have dialed 16,666 phone numbers to get the 500 people needed to

complete the survey. However, just because someone is willing to take the

survey doesn't necessarily mean that they are eligible to vote. In this

situation, eligibility to vote is what we would call our incidence rate or the rate at which we are actually talking to the

people we need to be talking to. The incidence rate affects the logistics of

the poll (and the client's invoice) because more than 16,666 numbers would have to be called in

order to interview 500 eligible voters - not to mention the phone numbers

purchased from a vendor that could not produce a response because they were

either out of service or belonged to a business.
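The arithmetic behind this example can be sketched in a few lines. The function name and the 80% incidence rate are hypothetical, chosen only to illustrate how the two rates combine; the 3% response rate and the 500 completed surveys come from the example above.

```python
import math

def numbers_to_dial(completes_needed, response_rate, incidence_rate=1.0):
    """Estimate how many working numbers must be dialed.

    response_rate: share of dialed numbers that agree to take the survey.
    incidence_rate: share of those respondents who are actually eligible.
    """
    return math.ceil(completes_needed / (response_rate * incidence_rate))

# Every respondent assumed eligible, as in the 16,666 figure above.
print(numbers_to_dial(500, 0.03))

# Hypothetical case: only 80% of respondents turn out to be eligible voters.
print(numbers_to_dial(500, 0.03, 0.80))
```

Rounding up gives 16,667 numbers in the first case, and any incidence rate below 100% pushes the total higher. Numbers that are out of service or belong to a business are not modelled here and would add to it again.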

Our problems aren't over yet. When

it comes to using landlines, it’s possible for a pollster to isolate a

geographic area by using the phone book. However, cell phones are not listed in

the phone book so in Toronto it is not possible to narrow down a cell phone

number to a specific section of the city before contacting it. A pollster can

conduct a city-wide municipal poll for a mayoral candidate by incorporating

cell phone numbers into their sampling frame with relatively little hassle

because it is possible to purchase lists of mobile numbers. However, all you know

about a given number is that, if it’s live, it probably belongs to

someone residing within the City of Toronto (some people may have a Toronto

number but live outside of the city, but we can ignore this complication for

our example). Conducting a municipal poll for a City Council candidate is quite

difficult as we are not able to isolate cell-phone respondents who live within

a specific ward within the city. Leaving out cell phone respondents could bias

my sample and the results my client paid for would be skewed.

There are 44

wards in Toronto. Assuming that we wish to have 15% of our sample consist of

cell phones, we need 75 cell phone respondents. With our 3%

response rate, if we could dial only the cell phones in our ward, we would

need to contact 2,500 individuals. But we have a second problem: roughly 1 out of

every 44 people who agree to do our survey will live in the ward we want, so

to get those 75 respondents we will actually need to contact 110,000 cell

phone numbers. And here’s one more caveat: cell phone number lists often include

numbers that are not connected (that is, they are assigned as cell phone

numbers, but no one has purchased them yet), so we will need

to call even more than 110,000 numbers! This would be both time-consuming and quite expensive for my client.
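The same figures can be reproduced step by step. This is a minimal sketch that assumes, as the example does, that residents are spread evenly across the 44 wards. The variable names are illustrative, and unconnected numbers are left out, so the real total would be higher still.

```python
from fractions import Fraction

SAMPLE_SIZE = 500
CELL_SHARE = Fraction(15, 100)    # share of the sample reached by cell phone
RESPONSE_RATE = Fraction(3, 100)  # the 3% response rate used in the example
WARDS = 44                        # assumes residents are spread evenly across wards

# Cell phone respondents needed for the ward-level poll.
cell_completes = SAMPLE_SIZE * CELL_SHARE

# Contacts needed if we could dial only cell phones inside the ward.
contacts_if_ward_known = cell_completes / RESPONSE_RATE

# Contacts needed when only 1 respondent in 44 lives in the ward.
contacts_city_wide = contacts_if_ward_known * WARDS

print(int(cell_completes), int(contacts_if_ward_known), int(contacts_city_wide))
```

Exact fractions are used instead of floating-point numbers so the results land on whole numbers without rounding surprises.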

For these reasons it is important

for City Council candidates to understand that they are uniquely situated within

the world of electoral politics and public opinion. They face a set of

challenges that their mayoral colleagues do not and they need to take these

obstacles into account if they are serious about conducting market research.

Tuesday 5 August 2014

Comparing NDP Performance in the 416 during the 2011 and 2014 Provincial Elections

The 2014

Ontario Election set an interesting tone for the NDP in downtown Toronto. Of

the five ridings in the 416 that were held by the NDP, only two were able to

survive the Ontario Liberal Party's surge to a majority government. Ironically,

the NDP's rightward positioning in their election platform resulted in

victories in the ridings of Windsor West, Sudbury, and Oshawa. Each of these

ridings plays host to large concentrations of working-class individuals and

families.

So what

happened to the NDP in Toronto? My own riding of Trinity-Spadina alone saw

the Ontario Liberals beat long-time NDP incumbent Rosario Marchese by 9,200

votes. Marchese had represented the area since 1990 after defeating Liberal

candidate Bob Wong in what was then the riding of Fort York.

I wanted to

take a deeper, more quantitative look at what took place in the four

downtown Toronto ridings that the NDP lost, or nearly lost, to the Liberals. Borrowing from

the work of Ryan Pike, a graduate student at the University of Calgary and a

contributor to Tom Flanagan's analysis of the 2012 Calgary-Centre by-election

in his book Winning Power, I examine the geographic tendencies that

occurred in the distribution of the vote at the polling district level between

the two provincial elections of 2011 and 2014. For each polling district in the four

ridings, I correlated the percentage of the vote each party received in 2011

with its own percentage in 2014 and with the 2014 percentages of the other major

parties. The correlation coefficient tables can be found below.
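
To make the method concrete, here is a minimal sketch of how such a cross-election correlation table could be computed. It assumes a Pearson correlation (the exact coefficient used is not stated), and the vote percentages below are made up for illustration; they are not Elections Ontario data.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("a series with no variation has no defined correlation")
    return sxy / math.sqrt(sxx * syy)


def correlation_table(shares_2011, shares_2014):
    """Map (party in 2014, party in 2011) to the correlation of their
    poll-level vote shares, rounded to two decimals."""
    return {
        (p14, p11): round(pearson_r(shares_2011[p11], shares_2014[p14]), 2)
        for p14 in shares_2014
        for p11 in shares_2011
    }


# Hypothetical vote percentages for five polling districts (same order in both years).
shares_2011 = {"Lib": [30, 35, 40, 45, 50], "NDP": [50, 45, 40, 35, 30]}
shares_2014 = {"Lib": [40, 44, 50, 53, 60], "NDP": [45, 42, 36, 33, 28]}

for (p14, p11), r in correlation_table(shares_2011, shares_2014).items():
    print(f"{p14} 14 vs {p11} 11: {r}")
```

Each row of the tables at the end of this post corresponds to one 2014 party, and each column to one 2011 party.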

The reader

should note that this analysis was made possible due to the relative similarity

of the boundaries identifying polling districts in each riding for both

elections. On average, there were roughly five more polling districts per riding in

2014 than in 2011. I adjusted for this by merging

geographically similar polls to create an equal number of polling districts for

both election years.
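
As a rough sketch of what this merging step can look like, the snippet below sums the raw votes of polls that are mapped onto the same district and only then recomputes percentages. The poll IDs, vote counts, and the `merge_map` are hypothetical; which polls count as geographically similar is a judgment call made by hand.

```python
from collections import defaultdict


def merge_polls(votes_by_poll, merge_map):
    """Sum raw votes of polls that merge_map sends to the same target poll.
    Polls not listed in merge_map are kept under their own ID."""
    merged = defaultdict(lambda: defaultdict(int))
    for poll_id, party_votes in votes_by_poll.items():
        target = merge_map.get(poll_id, poll_id)
        for party, votes in party_votes.items():
            merged[target][party] += votes
    return {poll: dict(parties) for poll, parties in merged.items()}


def vote_shares(votes_by_poll):
    """Convert raw votes to percentages of the poll's total."""
    shares = {}
    for poll, parties in votes_by_poll.items():
        total = sum(parties.values())
        shares[poll] = {p: 100 * v / total for p, v in parties.items()} if total else {}
    return shares


# Hypothetical 2014 counts: poll "001A" was carved out of 2011's poll "001".
votes_2014 = {
    "001": {"NDP": 40, "Lib": 60},
    "001A": {"NDP": 10, "Lib": 40},
    "002": {"NDP": 70, "Lib": 30},
}
merged_2014 = merge_polls(votes_2014, merge_map={"001A": "001"})
print(vote_shares(merged_2014))
```

Merging the raw counts before computing shares keeps a small poll from being weighted the same as a large one.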

The four

ridings that this analysis looked at include Trinity-Spadina, Davenport,

Beaches-East York, and Parkdale-High Park. Toronto-Danforth was not chosen for

this analysis as the NDP incumbent Peter Tabuns significantly outperformed his

colleagues in the 416 and won by a (relatively) comfortable margin of 3,200

votes. Although NDP candidate Cheri DiNovo won her riding of Parkdale-High

Park, her margin of victory was very slim.

When we

examine how well each party did in 2011 with itself in 2014, all parties

experienced primarily large, positive, and highly statistically significant

relationships. This means that the relative patterns of strength

remained similar for each party, even if the absolute numbers showed

that voters were more favourable to the OLP. This is an interesting finding as

the NDP received far fewer votes in three of four ridings in 2014 even though

the polling districts show large, strong NDP correlations.
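
For readers curious how the significance stars in the tables can be derived from a correlation, here is one possible sketch. It uses the Fisher z-transformation with a normal approximation, which is my substitution: the test actually used is not stated here, nor is the number of polling districts per riding, so the `n` values in the example are purely hypothetical.

```python
import math
from statistics import NormalDist


def correlation_p_value(r, n):
    """Approximate two-sided p-value for a correlation r over n observations,
    using the Fisher z-transformation (a normal approximation)."""
    if n < 4 or not -1 < r < 1:
        raise ValueError("need n >= 4 and -1 < r < 1")
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def stars(p):
    """Map a p-value to the star notation used in the tables."""
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return ""


# Hypothetical n: a moderate correlation over 200 polls versus a weak one.
print(stars(correlation_p_value(0.44, 200)))
print(repr(stars(correlation_p_value(0.05, 200))))
```

The same coefficient can earn more or fewer stars depending on how many polling districts a riding has, which is why the tables report both.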

One of the

more interesting findings from this examination is that in all four ridings,

the correlation strength between the 2011 and 2014 NDP performance is not only

large, it is larger than the OLP's correlation with its own performance in the

same election years. Analysis shows that the NDP tended to do rather well in the

same polling districts in which they had done well in 2011. This means that

geographically, long-time NDP voters were more likely to support their own

party than traditional Liberal voters were to support the OLP, but there were not

enough of these strong/loyal NDP supporters in each of these ridings for the

NDP to maintain control. It should be noted that turnout was roughly 3% higher

in the 2014 election than in the 2011 election. These “new” 2014 voters may have

largely voted Liberal in these ridings, which may help explain the final

results.

The analysis

found that in two of the four ridings, there was a stronger negative correlation

between the 2011 Liberal vote and the 2014 NDP vote than between the 2011 NDP

vote and the 2014 OLP vote. This suggests that someone who voted Liberal in

2011 was less likely to vote for the NDP in 2014 than

someone who voted NDP in 2011 was to vote for the Liberals in 2014.

Initially I

was quite confused as to why there was such a strong positive relationship

between the 2011 and 2014 NDP vote. However, I took a look at some publicly

released polls to help situate my findings within the attitudes and beliefs of

the electorate. In Abacus Data's June 11th poll, 69% of

respondents who voted NDP in 2011 stated that they would vote NDP in 2014,

while 73% of individuals who voted OLP in 2011 stated that they would vote OLP

in 2014. This may help explain the findings in the

paragraph above.

Taking the

above into account, how could the NDP perform well in the same polling

districts in 2014 as in 2011 but still lose three (almost four) of their five

ridings? I believe that these losses may have been the result of the overall

number of 'weak' NDP supporters in the downtown area. Almost every NDP

candidate across the 416 heard virtually the same thing from supporters at the

door: “I usually vote NDP but I don't know if I can again in this election.

This budget is just too good. Why did we call this election in the first place?”

The strong, positive and significant findings from the analysis show that

die-hard NDP supporters did in fact come out to support their party on Election

Day, but the actual election results show that a large number of those who

voted NDP in 2011 were weak enough in their convictions that they chose to vote

against their party in favour of the Liberals. The polling districts that the

NDP did well in during the 2014 election were largely in the stronger NDP areas

of the city, but there were not enough strong NDP voters throughout each riding

to guarantee an NDP win.

There are

many reasons why large numbers of downtown Toronto NDP supporters could have

defected in this past election. One reason may be that these weak NDP

supporters voted with their wallets by supporting a budget that they

believed would serve them best. Alternatively, 416 NDP supporters may have been

upset with Andrea Horwath's leadership and performance in the legislature -

more specifically her decision to dissolve the legislature and trigger the

election, especially when she was presented with a budget that (theoretically)

satisfied much of the NDP base. Both of these explanations can be rooted in the

Bloc-Recursive Model's assumptions that leadership evaluation and economic

considerations are two of the biggest variables that influence the way a

voter casts their ballot, and may help explain why so many NDP supporters

defected en masse.

Geography,

however, might be a much more useful variable to take into account. More

specifically, I am referring to the stark divide between Ontarians

living in Toronto and those in the rest of Ontario. Due to Toronto's urban and

metropolitan nature, weak NDP partisans in the downtown 416 may have identified

themselves as progressive first and with a political party second.

This segment of voters may have seen the NDP in 2011 as best representing

their progressive values. In 2014, however, these voters may have been both seduced by

the Liberal Party's framing and disciplined communication of its

'progressive' budget and dismayed by the NDP's rightward positioning in

their platform. This Toronto-elsewhere paradigm might also explain why gains

were made in working-class ridings outside of the GTA, even as the NDP was

positioning itself more to the right. Although further research is needed, I

would assume that Torontonians are more likely to identify themselves as progressive than residents of other urban

centres throughout the province. The more distant ridings of Windsor, Sudbury

and to a certain extent Oshawa saw voters who held different attitudes,

beliefs, and norms than those of the 416. Correlation coefficient analysis of

these ridings would be beneficial to help confirm this.

Another

interesting finding to note is that in all four ridings, there is a positive

correlation between the OLP's 2011 vote and the PCs' 2014 vote at the polling district level. One

reason why this may be the case is that a number of

voters in both the PC and OLP camps may have cast their ballots for the party to

their left or right. These defections, however, were not large enough to impact

final results. These leftward shifts by 2011 PC voters may have also contributed

to the OLP successes in these ridings.

Although

polling data would provide more insight, I personally believe this 'blending'

of the two parties may have been a result of the following: Liberals may have

been angry at their government's mismanagement (or at least perceived

mismanagement) of the province. Alternatively, PC supporters may have been

angry with Hudak himself (leadership evaluation) or his party's poorly

communicated austerity policies (economic considerations). However, it is

important to note that only three of these correlations were statistically

significant. PC supporters were more likely to go towards the OLP than to any

other party, while Greens were more likely to go to the NDP than to any other

party.

All

poll-level data for this analysis was obtained from the official 2011

Provincial Election results and the unofficial 2014 Provincial

Election Results from Elections Ontario.

| Trinity-Spadina | Lib 11 | NDP 11 | PC 11 | Green 11 |
|---|---|---|---|---|
| Lib 14 | .24*** | -.23*** | .14* | -.08 |
| NDP 14 | -.40*** | .44*** | -.35*** | .13* |
| PC 14 | .39*** | -.42*** | .41*** | -.31*** |
| Green 14 | -.26*** | .18** | -.25*** | .51*** |

* Sig. <.05 ** Sig. <.01 *** Sig. <.001

Source: Elections Ontario

| Davenport | Lib 11 | NDP 11 | PC 11 | Green 11 |
|---|---|---|---|---|
| Lib 14 | .52*** | -.49*** | .11 | -.26*** |
| NDP 14 | -.49*** | .55*** | -.27*** | .22** |
| PC 14 | .28*** | -.45*** | .43*** | -.06 |
| Green 14 | -.44*** | .44*** | -.12 | .18* |

* Sig. <.05 ** Sig. <.01 *** Sig. <.001

Source: Elections Ontario

| Beaches-East York | Lib 11 | NDP 11 | PC 11 | Green 11 |
|---|---|---|---|---|
| Lib 14 | .58*** | -.40*** | -.27*** | .06 |
| NDP 14 | -.54*** | .64*** | -.18* | -.16* |
| PC 14 | .05 | -.42*** | .66*** | -.01 |
| Green 14 | .08 | -.16 | .02 | .40*** |

* Sig. <.05 ** Sig. <.01 *** Sig. <.001

Source: Elections Ontario

| Parkdale-High Park | Lib 11 | NDP 11 | PC 11 | Green 11 |
|---|---|---|---|---|
| Lib 14 | .58*** | -.65*** | .48*** | -.21** |
| NDP 14 | -.53*** | .79*** | -.72*** | .19** |
| PC 14 | .27*** | -.67*** | .78*** | -.17* |
| Green 14 | -.05 | .03 | -.11 | .33*** |

* Sig. <.05 ** Sig. <.01 *** Sig. <.001

Source: Elections Ontario
